Machine learning needs testing

“Creating reliable, production-level machine learning systems brings on a host of concerns not found in small toy examples or even large offline research experiments. Testing and monitoring are key considerations for ensuring the production-readiness of an ML system, and for reducing technical debt of ML systems.” - The ML Test Score: A Rubric for ML Production Readiness and Technical Debt Reduction

As machine learning (ML) systems take on a more important role in production software, reliability becomes one of the most important things to be concerned about. In production, testing is crucial for ensuring correctness and delivering a good user experience.

In this post, I will summarize some key points of the paper and add some personal notes.

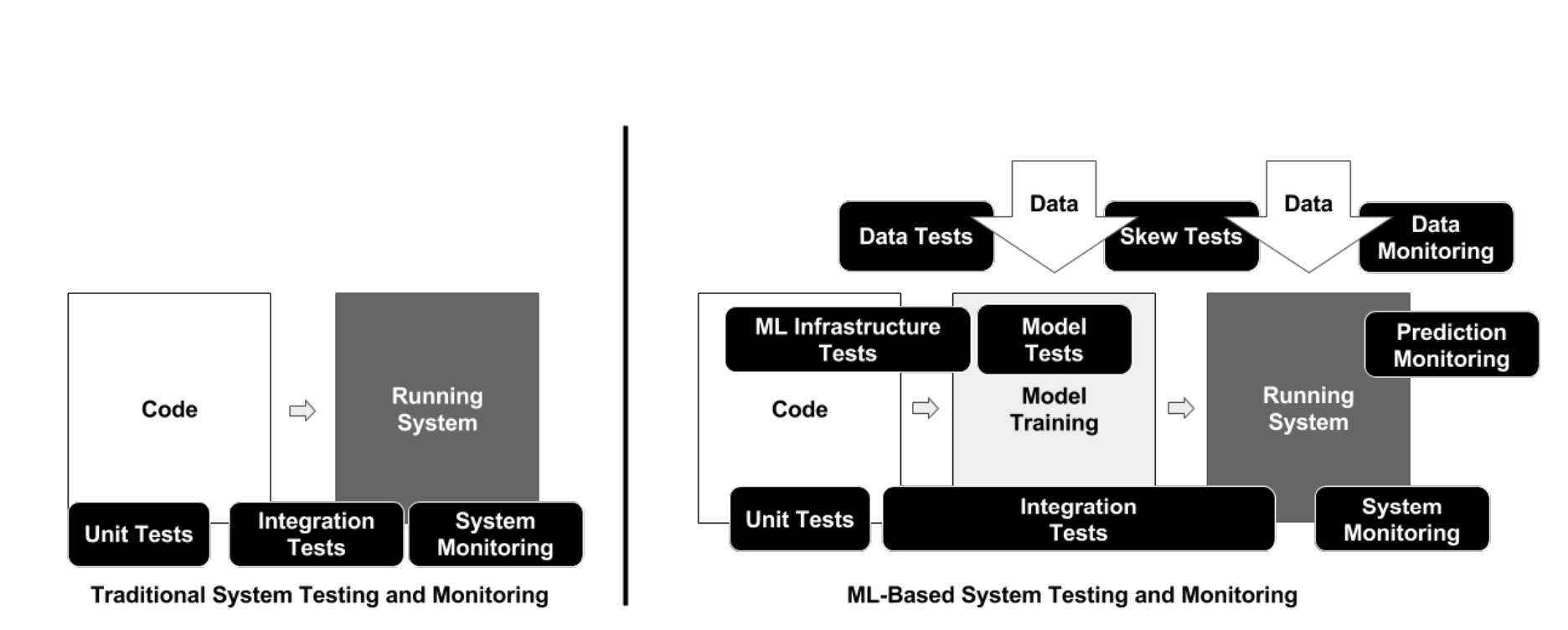

Manually coded systems vs. ML-based systems

An ML system depends strongly on both data and code. Training data needs to be treated like code, with versioning and testing, and a trained model needs the same production practices as a binary, such as debuggability, rollbacks, and monitoring. So besides regular software tests such as unit tests, integration tests, and system monitoring, we need to add tests for the data and the trained model.

Tests

Data

- Feature expectations are captured in a schema

- All features are beneficial

- No feature's cost is too much

- Features adhere to meta-level requirements

- The data pipeline has appropriate privacy controls

- New features can be added quickly

- All input feature code is tested
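
To make the first item in this list more concrete, here is a minimal sketch of a schema check. The feature names (`age`, `country`, `clicks_7d`) and their allowed ranges are hypothetical and only there for illustration; a real project would more likely use a dedicated data validation tool rather than hand-written predicates.

```python
from typing import Any, Callable, Dict, List

# Hypothetical schema: feature name -> predicate a valid value must satisfy.
SCHEMA: Dict[str, Callable[[Any], bool]] = {
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
    "country": lambda v: v in {"US", "VN", "DE"},
    "clicks_7d": lambda v: isinstance(v, int) and v >= 0,
}


def validate_row(row: Dict[str, Any]) -> List[str]:
    """Return human-readable schema violations for one example."""
    errors = []
    for name, is_valid in SCHEMA.items():
        if name not in row:
            errors.append(f"missing feature: {name}")
        elif not is_valid(row[name]):
            errors.append(f"invalid value for {name}: {row[name]!r}")
    return errors


good = {"age": 31, "country": "VN", "clicks_7d": 4}
bad = {"age": -5, "country": "VN"}  # negative age, clicks_7d missing

print(validate_row(good))
print(validate_row(bad))
```

Running a check like this on every new batch of training data turns the schema from documentation into an automated test.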

Model Development

- Model specs are reviewed and submitted

- Offline and online metrics are correlated

- All hyper-parameters are tuned

- The impact of model staleness is known

- A simpler model is not better

- Model quality is sufficient on important slices

- The model is tested for considerations of inclusion
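
The item about important slices can be sketched as a small test. This is only an illustration under my own assumptions: the slice key (a country code), the toy records, and the accuracy threshold are all made up, and accuracy stands in for whatever quality metric the model really uses.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, int, int]  # (slice_key, label, prediction)


def accuracy_by_slice(records: List[Record]) -> Dict[str, float]:
    """Compute accuracy separately for every slice key."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for key, label, pred in records:
        total[key] += 1
        correct[key] += int(label == pred)
    return {key: correct[key] / total[key] for key in total}


def failing_slices(records: List[Record], min_accuracy: float) -> Dict[str, float]:
    """Return the slices whose accuracy is below the threshold."""
    per_slice = accuracy_by_slice(records)
    return {k: acc for k, acc in per_slice.items() if acc < min_accuracy}


# Toy data: overall accuracy is 5/6, but the "VN" slice is only 1/2.
records = [
    ("US", 1, 1), ("US", 0, 0), ("US", 1, 1), ("US", 0, 0),
    ("VN", 1, 0), ("VN", 0, 0),
]

print(failing_slices(records, min_accuracy=0.8))
```

The point of the example is that a good global metric can hide a weak slice, so the threshold is checked per slice rather than on the whole dataset.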

ML Infra

- Training is reproducible

- Model specs are unit tested

- The ML pipeline is integration tested

- Model quality is validated before serving

- The model is debuggable

- Models are canaried before deploying

- Serving models can be rolled back
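
As a rough illustration of the first item, a reproducibility test can train the same model twice from the same seed and compare the results. The `train` function below is a hypothetical toy (a one-variable linear model fitted with SGD on a fixed dataset), not anything from the paper; real training jobs have many more sources of nondeterminism to control.

```python
import random
from typing import Tuple


def train(seed: int, steps: int = 200, lr: float = 0.05) -> Tuple[float, float]:
    """Fit y = w*x + b with SGD on a toy dataset; all randomness comes from `seed`."""
    rng = random.Random(seed)  # local RNG, so no hidden global state
    data = [(x / 10.0, 2.0 * (x / 10.0) + 1.0) for x in range(10)]
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(steps):
        x, y = rng.choice(data)
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return w, b


def test_training_is_reproducible() -> None:
    # Same seed must give exactly the same parameters.
    assert train(seed=42) == train(seed=42)


test_training_is_reproducible()
print("training is reproducible for a fixed seed")
```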

Monitoring

- Dependency changes result in notification

- Data invariants hold for input

- Training and serving are not skewed

- Models are not stale

- Models are numerically stable

- Computing performance has not regressed

- Prediction quality has not regressed
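
One crude way to watch for training/serving skew is to compare simple feature statistics between the two environments. The sketch below compares means only; the feature names, sample values, and the 10% tolerance are assumptions of mine, and a production monitor would compare richer statistics or the actual feature values for the same examples.

```python
from statistics import mean
from typing import Dict, List


def skewed_features(
    train: Dict[str, List[float]],
    serving: Dict[str, List[float]],
    rel_tol: float = 0.1,
) -> List[str]:
    """Flag features whose serving mean differs from the training mean
    by more than `rel_tol`, relative to the training mean."""
    flagged = []
    for name in train:
        t, s = mean(train[name]), mean(serving[name])
        denom = max(abs(t), 1e-12)  # avoid division by zero
        if abs(t - s) / denom > rel_tol:
            flagged.append(name)
    return flagged


# Hypothetical samples logged at training time and at serving time.
train_values = {"age": [30, 40, 50], "clicks_7d": [1, 2, 3]}
serving_values = {"age": [31, 39, 50], "clicks_7d": [4, 5, 6]}

print(skewed_features(train_values, serving_values))
```

A monitor built on this idea would run on a schedule and raise an alert whenever the returned list is not empty.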

How to calculate the score

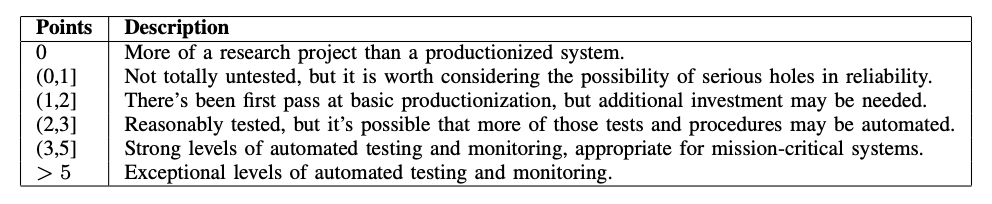

The final test score is computed as follows:

- For each test, half a point is awarded for executing the test manually, with the results documented and distributed

- A full point is awarded if there is a system in place to run that test automatically on a repeated basis

- Sum the scores within each of the 4 sections individually

- The final ML Test Score is computed by taking the minimum of the scores aggregated for each of the 4 sections
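
The scoring rules above can be sketched in a few lines of Python. The status labels (`none`, `manual`, `automated`) and the test names in the example are my own shorthand, not terms from the paper.

```python
from typing import Dict

# Points per test: 0 if not done, 0.5 if run manually and documented,
# 1.0 if run automatically on a repeated basis.
POINTS = {"none": 0.0, "manual": 0.5, "automated": 1.0}


def section_score(statuses: Dict[str, str]) -> float:
    """Sum the points of all tests in one section."""
    return sum(POINTS[status] for status in statuses.values())


def ml_test_score(sections: Dict[str, Dict[str, str]]) -> float:
    """The final score is the minimum of the per-section sums."""
    return min(section_score(tests) for tests in sections.values())


# Hypothetical, partially filled-in assessment of a system.
example = {
    "data": {"schema": "automated", "privacy": "manual"},            # 1.5
    "model": {"hyperparameters": "manual", "slices": "manual"},      # 1.0
    "infra": {"reproducible": "automated", "rollback": "automated",
              "canary": "none"},                                     # 2.0
    "monitoring": {"staleness": "manual"},                           # 0.5
}

for name, tests in example.items():
    print(name, section_score(tests))
print("ML Test Score:", ml_test_score(example))
```

Taking the minimum rather than the total means a system cannot compensate for a neglected section by doing very well in another one; in the example, the weak monitoring section sets the final score.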

Interpreting the score

Conclusion

We have just gone through how ML systems can be tested in production, from data to monitoring, to make sure they function well. In the next post, I will walk through some code to demonstrate the ideas of ML testing.

Stay tuned